Facial recognition with face-api.js and React

JavaScript

Pablo Vallecillos

If you have ever wondered how a web page or a mobile application can detect your face, this is the post for you!

To do it we will use React, a JavaScript library, together with face-api.js, which lets us recognize faces, facial features and expressions in the video captured by a webcam.

Let's get started…

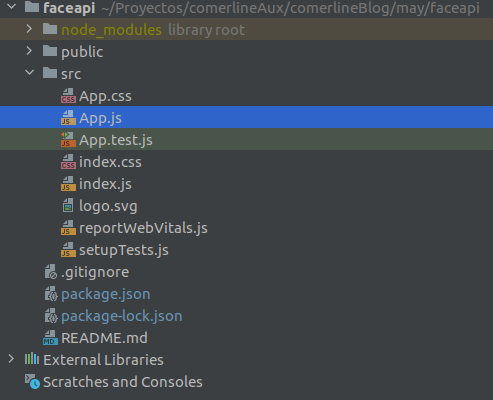

1- Create a new React project with: npx create-react-app .

2- Check that everything works locally by starting a development server at http://localhost:3000 with the command: npm start

At this point:

3- Our first step is to install the dependency we will use, in this case face-api.js

JavaScript API for face detection and face recognition in the browser implemented on top of the tensorflow.js core API (tensorflow/tfjs-core)

Run the command:

    npm i face-api.js

Next we open App.js; we will use this component to build the functionality:

4- First we import the package that detects the face and two React hooks:

    import * as faceApi from 'face-api.js';
    import {useEffect, useRef} from 'react';

5- Define the size of our video:

    const videoWidth = 640;
    const videoHeight = 480;

6- The useRef hook returns a mutable ref object whose .current property is initialized with the argument passed (initialValue).

The returned object persists for the entire lifetime of the component. We create one ref for the <video> element and one for the <canvas>:

    const videoRef = useRef();
    const canvasRef = useRef();
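To make the idea of a "mutable ref object" concrete, here is a minimal plain-JavaScript sketch. It is only an illustration of the shape of the object, not React's implementation, and `makeRef` is a hypothetical name that does not exist in React.

```javascript
// Plain-JavaScript illustration only; this is NOT React's implementation.
// makeRef is a hypothetical name used to show the shape of a ref object.
function makeRef(initialValue) {
  return { current: initialValue };
}

const exampleRef = makeRef(null);
const sameRef = exampleRef; // with useRef, the same object is returned on every render

// React would store the <video> DOM node here once the element is mounted.
exampleRef.current = { tagName: 'VIDEO' };

// The mutation is visible through every reference to the same object.
console.log(sameRef.current.tagName); // prints "VIDEO"
```

Because useRef() is called without an argument in this post, .current starts out as undefined and React fills it in when the element is mounted.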

7- Next we call the useEffect hook (the function passed to useEffect runs after the component's render has been committed to the screen) so that it runs the function that loads the models we will use with face-api.js. The model files must be available in the project's public/models folder.

Once the models are loaded, we access the webcam and assign its stream to our <video> tag.

    // The function passed to useEffect will run after the render is committed to the screen.
    useEffect(() => {
      const loadModels = async () => {
        // Relative URL: the files in /public/models are served at /models
        // https://github.com/justadudewhohacks/face-api.js-models
        const MODEL_URL = '/models';
        try {
          // First we load the models used by face-api.js and wait for all of them.
          // This example only needs tinyFaceDetector, faceLandmark68Net and faceExpressionNet.
          await Promise.all([
            faceApi.nets.tinyFaceDetector.loadFromUri(MODEL_URL),
            faceApi.nets.faceLandmark68Net.loadFromUri(MODEL_URL),
            faceApi.nets.faceRecognitionNet.loadFromUri(MODEL_URL),
            faceApi.nets.faceExpressionNet.loadFromUri(MODEL_URL),
            faceApi.nets.ageGenderNet.loadFromUri(MODEL_URL),
            faceApi.nets.ssdMobilenetv1.loadFromUri(MODEL_URL),
          ]);
          // With HTML5 came the introduction of APIs with access to device hardware, including the MediaDevices API.
          // This API provides access to multimedia input devices such as audio and video.
          // Since React does not support the srcObject attribute,
          // we use a ref to target the video and assign the stream to the srcObject property.
          videoRef.current.srcObject = await navigator.mediaDevices.getUserMedia({
            audio: false,
            video: {
              width: videoWidth,
              height: videoHeight,
            },
          });
        } catch (err) {
          // Loading a model or accessing the camera failed (for example, permission denied)
          console.error(err);
        }
      };
      // We run the function
      loadModels();
    }, []);
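The effect above opens the webcam but never releases it. As a minimal sketch, assuming we want to switch the camera off when the component goes away, a small helper could stop every track of the stream. `stopCamera` is a hypothetical name introduced here for illustration; `getTracks()` and `stop()` are the standard MediaStream and MediaStreamTrack methods.

```javascript
// Hypothetical helper (not part of React or face-api.js):
// releases the webcam stream attached to a <video> element.
// Returns the number of tracks that were stopped.
function stopCamera(videoElement) {
  const stream = videoElement && videoElement.srcObject;
  if (!stream) {
    return 0; // nothing attached, nothing to stop
  }
  const tracks = stream.getTracks();
  // Stopping each track turns the camera (and its indicator light) off
  tracks.forEach((track) => track.stop());
  videoElement.srcObject = null;
  return tracks.length;
}
```

In the component, one option would be to call it from the cleanup function returned by useEffect, for example return () => stopCamera(videoRef.current);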

8- Our last step is to draw points on our <canvas> tag that outline the face :). To do so we call a few face-api.js functions, and we refresh the points every 100 milliseconds.

    setInterval(async () => {
      try {
        if (canvasRef.current) {
          // We always want to match the canvas to its display size and we can do that with
          faceApi.matchDimensions(canvasRef.current, {
            width: videoWidth,
            height: videoHeight,
          });
          // https://justadudewhohacks.github.io/face-api.js/docs/globals.html#detectallfaces
          // face-api.js detects the faces in our video
          const detections = await faceApi
            .detectAllFaces(
              videoRef.current,
              new faceApi.TinyFaceDetectorOptions()
            )
            // Adds the landmark points that mark the different sections of the face.
            .withFaceLandmarks()
            // If we want to see our emotions, we can call
            .withFaceExpressions();
          // https://justadudewhohacks.github.io/face-api.js/docs/globals.html#resizeResults
          // Scale the results to the size of the canvas
          const resizedDetections = faceApi.resizeResults(
            detections,
            {
              width: videoWidth,
              height: videoHeight,
            }
          );
          // The component may have been unmounted while the detection was running
          if (canvasRef.current) {
            canvasRef.current
              // The HTMLCanvasElement.getContext() method returns a drawing context on the canvas,
              // or null if the context identifier is not supported.
              .getContext('2d')
              // The clearRect() method in HTML canvas is used to clear the pixels in a given rectangle.
              .clearRect(0, 0, videoWidth, videoHeight);
            // Draw our detections, face landmarks and expressions.
            faceApi.draw.drawDetections(canvasRef.current, resizedDetections);
            faceApi.draw.drawFaceLandmarks(
              canvasRef.current,
              resizedDetections
            );
            faceApi.draw.drawFaceExpressions(
              canvasRef.current,
              resizedDetections
            );
          }
        }
      } catch (err) {
        // Log the error; a blocking dialog inside a 100 ms loop would freeze the page
        console.error(err);
      }
    }, 100);
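A detection can take longer than 100 milliseconds on a slow device, in which case the interval would start a new run before the previous one has finished, and nothing ever stops the timer. The following is a minimal sketch of one way to handle both points. `skipWhileBusy` and `startLoop` are hypothetical helper names, not part of face-api.js or React, and `task` stands for the async detection function shown above.

```javascript
// Hypothetical helpers (not part of face-api.js or React).

// Wraps an async task so that calls made while a previous call is still
// pending are skipped instead of piling up.
// The wrapper resolves to true if the task ran and false if it was skipped.
function skipWhileBusy(task) {
  let busy = false;
  return async () => {
    if (busy) {
      return false; // the previous run has not finished yet
    }
    busy = true;
    try {
      await task();
      return true;
    } finally {
      busy = false; // always release the guard, even if the task throws
    }
  };
}

// Runs the task every intervalMs milliseconds and returns a function
// that stops the loop.
function startLoop(task, intervalMs) {
  const guarded = skipWhileBusy(task);
  const id = setInterval(() => {
    guarded().catch((err) => console.error(err));
  }, intervalMs);
  return () => clearInterval(id);
}
```

With these helpers, the onPlay handler could keep the function returned by startLoop(detectAndDraw, 100) and call it when the component unmounts, where detectAndDraw is an assumed name for the body of the interval callback.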

Our complete component looks like this:

    import * as faceApi from 'face-api.js';
    import {useEffect, useRef} from 'react';

    function App() {
      // useRef returns a mutable ref object whose .current property is initialized to the passed argument (initialValue).
      // The returned object will persist for the full lifetime of the component.
      const videoRef = useRef();
      const canvasRef = useRef();
      const videoWidth = 640;
      const videoHeight = 480;

      // The function passed to useEffect will run after the render is committed to the screen.
      useEffect(() => {
        const loadModels = async () => {
          // Relative URL: the files in /public/models are served at /models
          // https://github.com/justadudewhohacks/face-api.js-models
          const MODEL_URL = '/models';
          try {
            // First we load the models used by face-api.js and wait for all of them.
            // This example only needs tinyFaceDetector, faceLandmark68Net and faceExpressionNet.
            await Promise.all([
              faceApi.nets.tinyFaceDetector.loadFromUri(MODEL_URL),
              faceApi.nets.faceLandmark68Net.loadFromUri(MODEL_URL),
              faceApi.nets.faceRecognitionNet.loadFromUri(MODEL_URL),
              faceApi.nets.faceExpressionNet.loadFromUri(MODEL_URL),
              faceApi.nets.ageGenderNet.loadFromUri(MODEL_URL),
              faceApi.nets.ssdMobilenetv1.loadFromUri(MODEL_URL),
            ]);
            // With HTML5 came the introduction of APIs with access to device hardware, including the MediaDevices API.
            // This API provides access to multimedia input devices such as audio and video.
            // Since React does not support the srcObject attribute,
            // we use a ref to target the video and assign the stream to the srcObject property.
            videoRef.current.srcObject = await navigator.mediaDevices.getUserMedia({
              audio: false,
              video: {
                width: videoWidth,
                height: videoHeight,
              },
            });
          } catch (err) {
            // Loading a model or accessing the camera failed (for example, permission denied)
            console.error(err);
          }
        };
        // We run the function
        loadModels();
      }, []);

      // React's onPlay event runs this function when the video has started to play
      const handleVideoOnPlay = () => {
        // Let's draw our face detector every 100 milliseconds in <canvas>,
        // an HTML element which can be used to draw graphics using scripts.
        setInterval(async () => {
          try {
            if (canvasRef.current) {
              // We always want to match the canvas to its display size and we can do that with
              faceApi.matchDimensions(canvasRef.current, {
                width: videoWidth,
                height: videoHeight,
              });
              // https://justadudewhohacks.github.io/face-api.js/docs/globals.html#detectallfaces
              // face-api.js detects the faces in our video
              const detections = await faceApi
                .detectAllFaces(
                  videoRef.current,
                  new faceApi.TinyFaceDetectorOptions()
                )
                // Adds the landmark points that mark the different sections of the face.
                .withFaceLandmarks()
                // If we want to see our emotions, we can call
                .withFaceExpressions();
              // https://justadudewhohacks.github.io/face-api.js/docs/globals.html#resizeResults
              // Scale the results to the size of the canvas
              const resizedDetections = faceApi.resizeResults(
                detections,
                {
                  width: videoWidth,
                  height: videoHeight,
                }
              );
              // The component may have been unmounted while the detection was running
              if (canvasRef.current) {
                canvasRef.current
                  // The HTMLCanvasElement.getContext() method returns a drawing context on the canvas,
                  // or null if the context identifier is not supported.
                  .getContext('2d')
                  // The clearRect() method in HTML canvas is used to clear the pixels in a given rectangle.
                  .clearRect(0, 0, videoWidth, videoHeight);
                // Draw our detections, face landmarks and expressions.
                faceApi.draw.drawDetections(canvasRef.current, resizedDetections);
                faceApi.draw.drawFaceLandmarks(
                  canvasRef.current,
                  resizedDetections
                );
                faceApi.draw.drawFaceExpressions(
                  canvasRef.current,
                  resizedDetections
                );
              }
            }
          } catch (err) {
            // Log the error; a blocking dialog inside a 100 ms loop would freeze the page
            console.error(err);
          }
        }, 100);
      };

      return (
        <div className="App">
          {/* To make the elements appear in a row */}
          <div style={{display: 'flex'}}>
            <video
              ref={videoRef}
              width={videoWidth}
              height={videoHeight}
              playsInline
              autoPlay
              onPlay={handleVideoOnPlay}
            />
            {/* To make our canvas appear on top of our video */}
            <canvas style={{position: 'absolute'}} ref={canvasRef}/>
          </div>
        </div>
      );
    }

    export default App;

Which gives us: